AI Models & Deployments

What is an AI Model?

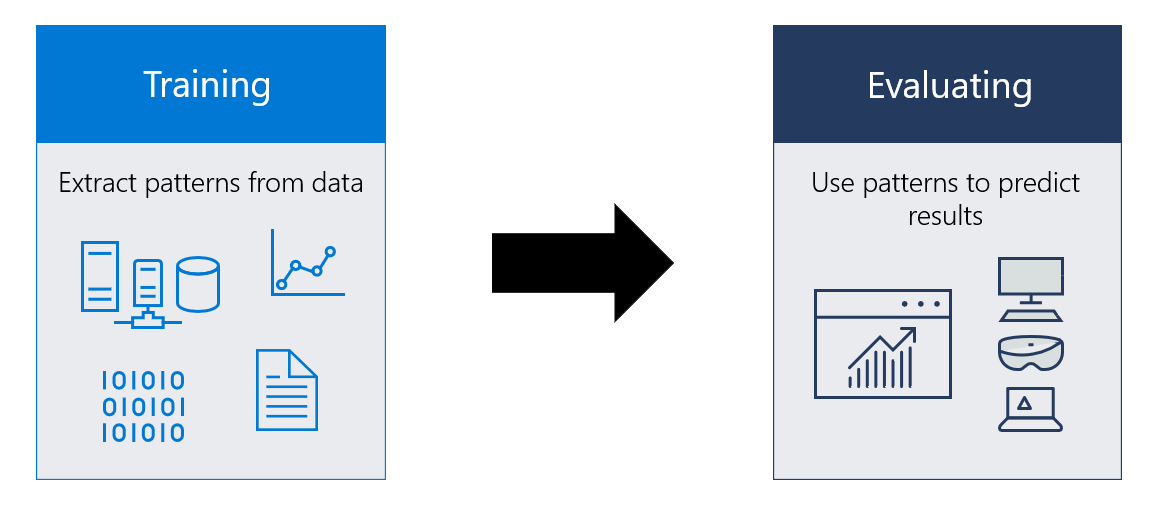

An AI model (or machine learning model) is a program that has been trained on a set of data to recognize certain types of patterns. Training produces an algorithm that the model can then apply to new data to reason about it and make predictions.

What is a Large Language Model?

A large language model (LLM) is a type of AI model that can process and produce natural language text. It is trained on massive amounts of data from diverse sources. A "foundation model" is a large model trained on broad data that can be adapted to many downstream tasks; a specific LLM release such as GPT-4 is one example of a foundation model. We'll cover these topics in more detail in the next lesson.

What are Embeddings?

An embedding is a special data representation format that machine learning models and algorithms can use easily. It is an information-dense representation of the semantic meaning of a piece of text, expressed as a vector of floating-point numbers. The distance between two embeddings in vector space reflects the semantic similarity of their original text inputs: the smaller the distance, the more similar the meaning.
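A common way to measure how close two embeddings are is cosine similarity, which is widely used with OpenAI embeddings (the source does not name a specific metric, so treat this choice as an assumption). The sketch below uses made-up 3-dimensional vectors purely for illustration; real embeddings from text-embedding-ada-002 have 1,536 dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Toy "embeddings" with invented values, standing in for real model output.
cat = [0.9, 0.1, 0.0]
kitten = [0.85, 0.15, 0.05]
invoice = [0.0, 0.2, 0.95]

# The cat/kitten pair scores higher than the cat/invoice pair.
print(round(cosine_similarity(cat, kitten), 3))
print(round(cosine_similarity(cat, invoice), 3))
```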

Embeddings let us use vector search methods to query text data more efficiently. For example, they power vector similarity search in databases like Azure Cosmos DB for MongoDB vCore. The recommended embedding model is currently text-embedding-ada-002.
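Conceptually, a vector similarity search embeds the query, compares it against the stored document embeddings, and returns the closest matches. The brute-force sketch below shows only that idea; the document names and vectors are hypothetical, and production databases typically rely on approximate nearest-neighbor indexes rather than scanning every vector.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query_vec: list[float], corpus: dict[str, list[float]], k: int = 2) -> list[tuple[float, str]]:
    """Return the k (score, doc_id) pairs most similar to query_vec, best match first."""
    scored = ((cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in corpus.items())
    return heapq.nlargest(k, scored)


# Hypothetical pre-computed document embeddings (toy values, not real model output).
corpus = {
    "pet-care.md": [0.9, 0.1, 0.0],
    "kitten-food.md": [0.8, 0.2, 0.1],
    "tax-guide.md": [0.0, 0.1, 0.99],
}

# Hypothetical embedding of a user query such as "how do I look after my cat?"
query = [0.88, 0.12, 0.02]

for score, doc_id in top_k(query, corpus, k=2):
    print(f"{doc_id}: {score:.3f}")
```

Brute force costs one comparison per stored vector, which is fine for a demo but is exactly what a vector index in the database is meant to avoid at scale.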

What Model should I use?

There are many considerations when choosing a model.

- Model pricing (by tokens, by artifacts)

- Model availability (by version, by region)

- Model performance (evaluation metrics)

- Model capability (features & parameters)

As a general guide, we recommend the following:

- Start with gpt-35-turbo. This model is very economical and has good performance. It's commonly used for chat applications (such as OpenAI's ChatGPT) but can be used for a wide range of tasks beyond chat and conversation.

- Move to gpt-35-turbo-16k, gpt-4 or gpt-4-32k if your prompt and completion together need more than the 4,096 tokens that gpt-35-turbo supports. These models cost more, can be slower and may have more limited availability; gpt-4 and gpt-4-32k are also the most capable models in this lineup. We'll cover tokenization in more detail in a later lesson.

- Consider embeddings for tasks like search, clustering, recommendations and anomaly detection.

- Use DALL-E (Preview) to generate images from text prompts that the user provides. Unlike the models above, which output text, DALL-E outputs images.

- Use Whisper (Preview) for speech-to-text conversion or audio transcription. It is optimized for transcribing audio files with English speech, though it can also transcribe speech in other languages. Use it to quickly transcribe individual audio files, or to translate speech in other languages into English text. An optional text prompt can guide the output, for example the spelling of uncommon names or terms.
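The context-window guidance above can be sketched as a small helper that picks the cheapest listed model whose window fits the prompt plus the expected completion. The window sizes are an assumption based on the original versions of each model (later versions differ), and the cost ordering is only a rough guide, so check the Azure OpenAI model documentation for the versions you actually deploy.

```python
# Context-window sizes in tokens (prompt + completion combined).
# ASSUMPTION: limits of the original model versions; verify for your deployment.
CONTEXT_WINDOWS = {
    "gpt-35-turbo": 4_096,
    "gpt-35-turbo-16k": 16_384,
    "gpt-4": 8_192,
    "gpt-4-32k": 32_768,
}

# Rough cost order, cheapest first (an assumption, not official pricing).
PREFERENCE_ORDER = ["gpt-35-turbo", "gpt-35-turbo-16k", "gpt-4", "gpt-4-32k"]


def pick_model(prompt_tokens: int, max_completion_tokens: int, need_gpt4: bool = False) -> str:
    """Return the first model in PREFERENCE_ORDER whose context window fits the request."""
    needed = prompt_tokens + max_completion_tokens
    for name in PREFERENCE_ORDER:
        if need_gpt4 and not name.startswith("gpt-4"):
            continue  # caller wants GPT-4-level capability, skip GPT-3.5 models
        if needed <= CONTEXT_WINDOWS[name]:
            return name
    raise ValueError(f"{needed} tokens exceeds every context window listed")


print(pick_model(1_000, 500))                   # small chat request
print(pick_model(6_000, 1_000))                 # long document summary
print(pick_model(6_000, 1_000, need_gpt4=True)) # same size, but needs GPT-4
```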

What is Azure OpenAI (AOAI)?

OpenAI has a diverse set of models that can "generate" different types of content (text, images, audio, code) from a natural language text input, or "prompt", provided by the user. The Azure OpenAI Service provides access to these OpenAI models over a REST API.
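As a rough illustration of the REST access described above, the sketch below builds (but does not send) a chat completions request using only the Python standard library. The resource name, deployment name and key are hypothetical placeholders, and `2024-02-01` is just an example `api-version` value; check the Azure OpenAI REST reference for the versions your resource supports.

```python
import json
import urllib.request


def build_chat_request(resource: str, deployment: str, api_key: str, prompt: str,
                       api_version: str = "2024-02-01") -> urllib.request.Request:
    """Build a POST request for an Azure OpenAI chat completions deployment."""
    # Azure routes requests by *deployment* name, not by model name.
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Hypothetical values; replace with your own resource, deployment and key.
req = build_chat_request("my-resource", "my-gpt35-deployment", "<your-key>", "Hello!")
print(req.get_method(), req.full_url)
# To send it: json.load(urllib.request.urlopen(req)) returns the parsed response.
```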

Currently available models include GPT-4, GPT-4 Turbo Preview, GPT-3.5, Embeddings, DALL-E (Preview) and Whisper (Preview). Azure OpenAI releases new model versions regularly to keep pace with OpenAI's updates to its foundation models. Developers can access them programmatically (using a Python SDK) or via the browser (using Azure AI Studio).
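For the Python SDK route, a minimal sketch with the `openai` package (version 1.x) might look like the following. The environment variable names, the example `api_version` value and the system prompt are assumptions for illustration. The SDK is imported inside `ask` so that the message-building helper can be used without the package installed.

```python
import os


def build_messages(question: str, system_prompt: str = "You are a helpful assistant.") -> list[dict]:
    """Assemble the chat message list sent to a chat completions deployment."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def ask(question: str, deployment: str) -> str:
    """Send one question to an Azure OpenAI chat deployment and return the reply text."""
    # Imported here so this module loads even when `openai` is not installed.
    from openai import AzureOpenAI  # requires: pip install "openai>=1.0"

    client = AzureOpenAI(
        azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],  # assumed env var name
        api_key=os.environ["AZURE_OPENAI_API_KEY"],          # assumed env var name
        api_version="2024-02-01",                            # example value; check the docs
    )
    # On Azure, `model` is the name of *your deployment*, not the base model name.
    response = client.chat.completions.create(model=deployment, messages=build_messages(question))
    return response.choices[0].message.content


# Usage (needs real credentials and a hypothetical deployment named "my-gpt35-deployment"):
# print(ask("What is an embedding?", deployment="my-gpt35-deployment"))
```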